On Data Flows for Machine Learning Services

Building data flows for machine learning systems is the mundane plumbing that ensures that a system

- is transparent and testable

- behaves as expected

- has clear ownership

- is scalable to high volume

- is scalable to many ML services

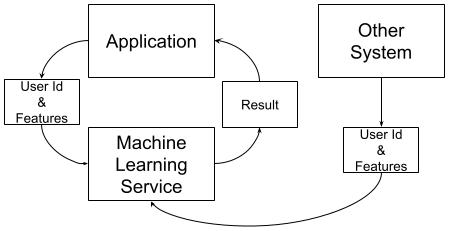

A system is typically set up to take some id (typically a user id) and return some results, such as a churn score or a set of recommendations. This is often a REST or gRPC API call into a separate service running next to a website or web app. The core of a system of this sort is a machine learning model that takes some data in, calculates some features, and then returns a result.

Generally something like,
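As a minimal sketch of that request/response shape, assuming a churn-scoring service: the names `ScoreResponse`, `compute_features`, `predict`, and `score_user`, the two raw inputs, and the hand-written linear rule are all hypothetical stand-ins for a real trained model and API layer.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ScoreResponse:
    user_id: str
    churn_score: float


def compute_features(raw: Dict[str, float]) -> List[float]:
    # Hypothetical features: logins per day and open support tickets.
    return [raw["logins_last_30d"] / 30.0, float(raw["open_tickets"])]


def predict(features: List[float]) -> float:
    # Stand-in for a trained model: a hand-written linear rule,
    # clipped to the range [0, 1].
    score = 0.8 - 0.6 * features[0] + 0.1 * features[1]
    return max(0.0, min(1.0, score))


def score_user(user_id: str, raw: Dict[str, float]) -> ScoreResponse:
    # Data in -> features -> result.
    features = compute_features(raw)
    return ScoreResponse(user_id=user_id, churn_score=predict(features))
```

The sketch deliberately leaves open where `raw` comes from, which is the question the rest of this post is about.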

This is pretty straightforward. The core problem, then, is: where does the machine learning model get its features from?

- The application - ⚠️ Anti-pattern ⚠️

- Some other system

- The application as raw data - 💫 Best Practice 💫

It's so easy to think of machine learning as just a thing that works and provides these amazing insights and personalization, but without having the data sets in sync and set up properly, the actual experience of developing, debugging, monitoring, and extending can stall out or require excessive maintenance.

Data Flows

The data flow should produce a system that

- is transparent and testable

- behaves as expected

- has clear ownership

- is scalable to high volume

- is scalable to many ML services

In practical terms, a system must

- Be usable in a non-production environment

- Return production quality results in a non-production environment

- Have clear boundaries for which team is responsible for which part of the system

- Scale to hundreds, thousands and millions of users

- Scale to 2, 3, 60, 500 machine learning services

Features come from the application - ⚠️ Anti-pattern ⚠️

In this system, the application calls the machine learning system and passes along everything the model needs to generate a result.
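A rough sketch of this coupling, under assumed names: `build_request` lives in the application, `handle_request` lives in the ML service, and the feature names and scoring rule are invented for illustration.

```python
from typing import Dict, List


# Application side (hypothetical): the app team computes the features itself.
def build_request(user_id: str, order_values: List[float]) -> Dict:
    count = len(order_values)
    return {
        "user_id": user_id,
        "features": {
            "order_count": count,
            "avg_order_value": sum(order_values) / count if count else 0.0,
        },
    }


# ML service side: it can only trust whatever features arrive with the call.
def handle_request(request: Dict) -> Dict:
    f = request["features"]
    # Stand-in scoring rule, capped at 1.0.
    score = min(1.0, 0.1 * f["order_count"] + 0.001 * f["avg_order_value"])
    return {"user_id": request["user_id"], "score": score}
```

Note that the feature definitions sit in the application's code. Any change to what `avg_order_value` means has to be coordinated across both teams, and a second model cannot reuse these features without the application sending them again.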

Pros & Cons

A system of this sort is very easy to develop and deploy, but hard to maintain.

- ✅ Be usable in a non-production environment

- ✅ Return production quality results in a non-production environment

- 🚫 Have clear boundaries for which team is responsible for which part of the system

- ✅ Scale to hundreds, thousands and millions of users

- 🚫 Scale to 2, 3, 60, 500 machine learning services

The team developing the features is typically not the same team that develops the machine learning model, so there can be misunderstandings and bugs. There can also be limitations on which features can be developed: machine learning models often need large amounts of data over time, and applications don't always have this readily available.

This type of system tightly couples the machine learning system to the application, and it prevents the data used to calculate the features from being generically available to other machine learning models.

Features come from some other system

In this system, the application calls the machine learning system and passes only an id (such as a user id) for which it needs a result.
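A minimal sketch of this lookup, assuming the features are precomputed by a batch job and keyed by production user id. The table `BATCH_FEATURES`, the id format, and the scoring rule are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical output of a nightly batch job, keyed by production user id.
BATCH_FEATURES: Dict[str, Dict[str, float]] = {
    "prod-user-1": {"logins_last_30d": 12, "open_tickets": 1},
}


def score_by_id(user_id: str) -> Optional[float]:
    # The application only sends an id; features are looked up elsewhere.
    features = BATCH_FEATURES.get(user_id)
    if features is None:
        # The id is unknown to the ML side, so there is nothing to score.
        return None
    score = 0.8 - 0.02 * features["logins_last_30d"] + 0.1 * features["open_tickets"]
    return max(0.0, min(1.0, score))
```

Calling `score_by_id("staging-user-7")` returns `None` here, because a user created in a staging copy of the application never appears in the batch table built from production data.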

Pros & Cons

A system of this sort is very easy to develop, deploy and maintain, but hard to test.

- 🚫 Be usable in a non-production environment

- 🚫 Return production quality results in a non-production environment

- ✅ Have clear boundaries for which team is responsible for which part of the system

- ✅ Scale to hundreds, thousands and millions of users

- ✅ Scale to 2, 3, 60, 500 machine learning services

These types of systems are also fairly easy to set up, often as batch mode systems that calculate the features separately from the application.

This is a really good place to start if your team or organization has never spun up a machine learning model. It's very understandable and easy to roll out, but requires more thinking on the testing side.

The main con here is that the application and the machine learning responses are not in sync, so a user id in one system may not be useful in another system. This makes testing difficult in non-production environments: either the application will have a user id that matches a different machine learning result, or it will have a user id that matches no result at all.

Features come from the application as raw data - 💫 Best Practice 💫

In this system, the application sends raw data into an aggregator (typically called a feature store). This data is then made available to the machine learning model for use in calculating a score or recommendations.
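The flow can be sketched as follows, assuming a tiny in-memory feature store. The `FeatureStore` class, its `record` and `features` methods, the event types, and both toy models are hypothetical; a real feature store would persist events and compute features at scale.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class FeatureStore:
    """Toy aggregator: the application writes raw events, models read features."""

    def __init__(self) -> None:
        self._events: Dict[str, List[Tuple[str, float]]] = defaultdict(list)

    def record(self, user_id: str, event_type: str, value: float = 1.0) -> None:
        # Called by the application with raw data only, no feature logic.
        self._events[user_id].append((event_type, value))

    def features(self, user_id: str) -> Dict[str, float]:
        # Feature definitions live here, owned by the ML side.
        events = self._events.get(user_id, [])
        purchases = [value for kind, value in events if kind == "purchase"]
        return {
            "event_count": len(events),
            "purchase_count": len(purchases),
            "purchase_total": sum(purchases),
        }


# Two stand-in models sharing the same features.
def churn_score(f: Dict[str, float]) -> float:
    return 1.0 if f["purchase_count"] == 0 else 0.2


def recommend(f: Dict[str, float]) -> List[str]:
    return ["premium_plan"] if f["purchase_total"] >= 100 else ["starter_plan"]
```

Because the only input is what the application emits, a staging application that writes its own events into a staging store produces features for exactly the users that exist in staging.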

Pros & Cons

A system of this sort is very hard to develop and deploy, but easy to test and scale.

- ✅ Be usable in a non-production environment

- ✅ Return production quality results in a non-production environment

- ✅ Have clear boundaries for which team is responsible for which part of the system

- ✅ Scale to hundreds, thousands and millions of users

- ✅ Scale to 2, 3, 60, 500 machine learning services

This is the most robust pattern available to an organization. It allows multiple models to leverage the same set of features, and it separates the concern of who maintains the features and how they are calculated from the concern of who uses the machine learning result.

This type of system also allows for testing in non-production environments since all the data comes directly from an application.

Bonus: ⚠️ Anti-pattern ⚠️ Features come from both the application and another system 🪢

In this system, the application will make a call to the machine learning system, and it'll give it some of the data it needs with the call to generate a result, with other data coming from some other system.

Pros & Cons

A system of this sort is very hard to develop, deploy, test and maintain.

- 🚫 Be usable in a non-production environment

- 🚫 Return production quality results in a non-production environment

- 🚫 Have clear boundaries for which team is responsible for which part of the system

- ✅ Scale to hundreds, thousands and millions of users

- 🚫 Scale to 2, 3, 60, 500 machine learning services

🤪 This type of system design is excellent if you'd like to tie yourself in knots, stay up all night, and be very unsure whether your system is performing as you expect it to 🤪

There are no pros to a system of this sort.

Notes on input datasets

Conclusion

Overall, it's best practice if the people and teams that own the machine learning model also own the construction of the features that power the model. Systems can be set up in such a way as to support this goal or to detract from it.

The goal should be a generic system that powers not one machine learning model but dozens, allowing an organization to scale.
